The Digital Services Act (hereinafter: “DSA”) imposes due diligence obligations on providers of intermediary services in order to ensure a transparent and safe online environment.

The concept of an intermediary service provider covers many different types of entities, often of different structure and size. The concept of an intermediary service is itself broad and covers a catalog of services with different characteristics. The legislator therefore recognized that due diligence obligations must be tailored to the type, size and nature of the intermediary service in question. As a result, the DSA distinguishes a catalog of basic obligations, which apply to all providers of intermediary services, and additional obligations, which apply only to particular types of providers because of the nature and scale of the services they provide. The basic obligations must be fulfilled by every intermediary service provider, regardless of type.

The basic due diligence obligations of intermediary service providers include:

- Designation of points of contact.

Intermediary service providers are required to designate a single electronic point of contact and to publish and keep up to date the relevant information about it. This obligation is intended to ensure smooth communication between the provider and the recipients of the service, as well as between the provider and Member State authorities, the European Commission and the European Board for Digital Services.

Unlike a legal representative, a point of contact does not need a physical location; it is a virtual place. The same point of contact can also serve obligations imposed under other legal acts, not only under the DSA. Information on points of contact must be easily accessible and kept up to date on the provider’s website.

The point of contact for service recipients should, above all, allow them to communicate directly and quickly with the intermediary service provider, electronically and in a user-friendly manner, including by allowing recipients to choose their means of communication, which must not rely solely on automated tools. In practice, this means that the service recipient should have a choice of at least two means of communication, at least one of which does not rely solely on automated tools.

- Appointment of legal representative.

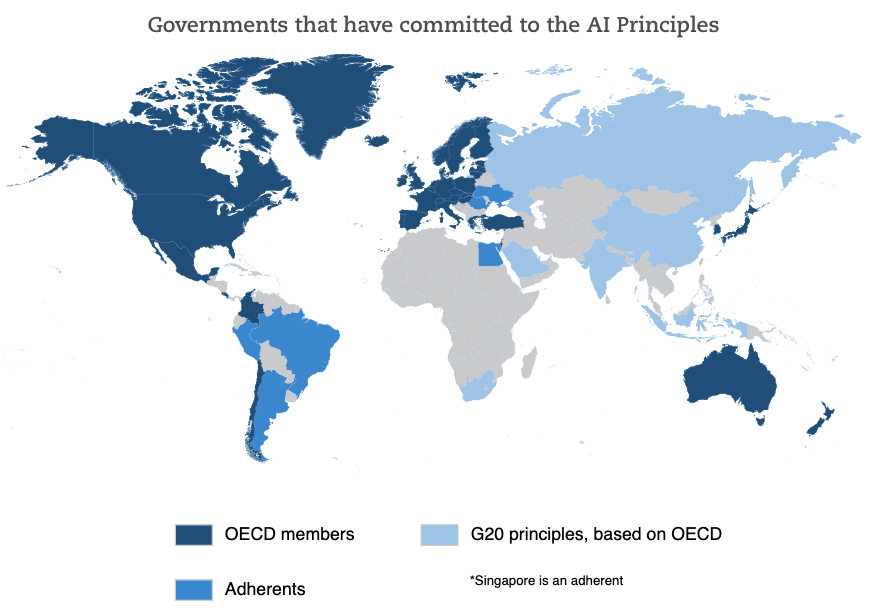

Intermediary service providers that are established in a third country (i.e., outside the EU) and offer services in the European Union should appoint a legal representative in the EU with sufficient authority, provide information on that legal representative to the relevant authorities, and make that information public.

Such providers must designate, in writing, a legal or natural person to act as their legal representative in one of the Member States where the provider offers its services. A legal representative may represent one or more intermediary service providers.

A legal representative is not merely an agent for service of documents in matters related to decisions issued by the authorities under the DSA. The representative must also be able to cooperate with the authorities and respond to requests and summonses received from them. The legal representative should be authorized to take the actions needed to ensure compliance with the decisions of the competent authorities.

In order to fulfill the obligation to appoint a legal representative, intermediary service providers should ensure that the appointed legal representative has the authority and resources necessary to cooperate with the relevant authorities. Adequate resources should be understood as appropriate competence and experience, as well as the organizational, legal or technical capabilities needed to perform such a role.

- Inclusion of information on restrictions on the use of services in the terms and conditions.

Intermediary service providers should include in their terms and conditions (for example, the rules that form part of their contracts with users) information on any restrictions they impose on the use of their services with respect to information provided by recipients of the service. This information must cover any policies, procedures, measures and tools used for content moderation, as well as the rules of procedure of the provider’s internal complaint-handling system. The DSA additionally requires that this information be set out in language that is clear and understandable to the recipient, and that it be made available in a machine-readable format.

Providers of intermediary services directed primarily at minors (e.g., because of the type of service or the type of marketing associated with it) should make a special effort to explain the terms of use in a manner that minors can easily understand.

Special obligations concerning the inclusion of information on restrictions in the terms and conditions apply to intermediary service providers that qualify as very large online platforms or very large online search engines. The main rationale given for these additional obligations is the need for such large entities to ensure particular transparency regarding the terms of use of their services. In addition to the obligation that applies to all intermediary service providers, providers of very large online platforms and very large online search engines are also required, among other things, to provide a summary of their terms and conditions and to make those terms available in the official languages of all Member States in which they offer their services.

- Reporting obligations.

The DSA also imposes an annual reporting obligation on intermediary service providers, covering any content moderation they carried out during the relevant period.

The report should include, among other things, the following information:

- Number of orders received from Member State authorities;

- Number of notices submitted under Article 16 of the DSA;

- Meaningful and comprehensible information on content moderation carried out on the provider’s own initiative;

- Number of complaints received through internal complaint handling systems in accordance with the provider’s terms of service;

- Any use of automated means for content moderation.

The European Commission has the authority under the DSA to adopt implementing acts to establish templates setting forth the form, content and other details of the reports, including harmonized reporting periods. The Commission is currently working on the adoption of such a template.

***

Want to learn more about the basic obligations of intermediary service providers under the Digital Services Act? For more, check out the publication by our law firm’s advisors: [Link to publication].